Microservice Service Discovery: API Gateway vs Service Mesh?

Introduction

What is a service registry?

What is service discovery?

Types of service discovery

What is an API gateway?

What is a service mesh?

Similarities and differences between a service mesh and an API gateway

Can an API gateway & service mesh co-exist?

Conclusion

Simplified Kubernetes management with Edge Stack API Gateway

When managing cloud-native connectivity and communication, one recurring question is which technology should handle how microservice-based applications interact: “Should I start with an API gateway or a service mesh?”

Both technologies are ultimately concerned with the same outcome: an end user making a successful API call within an environment. They can be thought of as two pages of the same book that differ in how they operate. Understanding the differences and similarities between them is essential when designing how your software communicates.

In this article, you will learn about service discovery in microservices and when to use a service mesh or an API gateway.

Introduction

In a microservice architecture, clients typically rely on an intermediary layer, such as an API gateway or a service mesh, to relay their API requests. This layer receives the clients’ requests and forwards them to the services they need.

Here’s the problem: the network locations (IP addresses and ports) of backend service instances are dynamic; they change. These changes can happen because nodes fail, new nodes are added to the network, or services are scaled up or down to meet demand. As a result, maintaining a stable and reliable connection between clients and services becomes challenging.

On top of that, an API gateway, including one running in Kubernetes, does not inherently know where a requested backend service is located, so it relies on service discovery. The gateway sends the service name to the service discovery software (e.g., ZooKeeper, HashiCorp Consul, Eureka, SkyDNS) and asks where that backend service can be reached. Once the service discovery software returns the address, the gateway forwards the request to it.
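The lookup-then-forward step above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular gateway is implemented: the `DISCOVERY` table stands in for a real discovery backend such as Consul or Eureka, and the service names, addresses, and the convention that the first path segment names the service are all hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical discovery data: logical service name -> (host, port).
# In a real system this answer would come from ZooKeeper, Consul, Eureka, or DNS.
DISCOVERY: Dict[str, Tuple[str, int]] = {
    "cart": ("10.0.1.12", 8080),
    "checkout": ("10.0.2.7", 9090),
}


def resolve(service_name: str) -> Tuple[str, int]:
    """Ask the discovery backend where a service can currently be reached."""
    if service_name not in DISCOVERY:
        raise LookupError(f"no instances registered for {service_name!r}")
    return DISCOVERY[service_name]


def upstream_url(request_path: str) -> str:
    """Translate /<service>/<rest> into the address the gateway forwards to."""
    # Assumption: the first path segment is the logical service name.
    service_name, _, rest = request_path.lstrip("/").partition("/")
    host, port = resolve(service_name)
    return f"http://{host}:{port}/{rest}"


print(upstream_url("/cart/items/42"))  # http://10.0.1.12:8080/items/42
```

Because the gateway only ever holds the service name, an instance can move to a new address without clients noticing; only the discovery data changes.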

Before diving in, let’s quickly talk about the service registry, as most service discovery concepts are based on it.

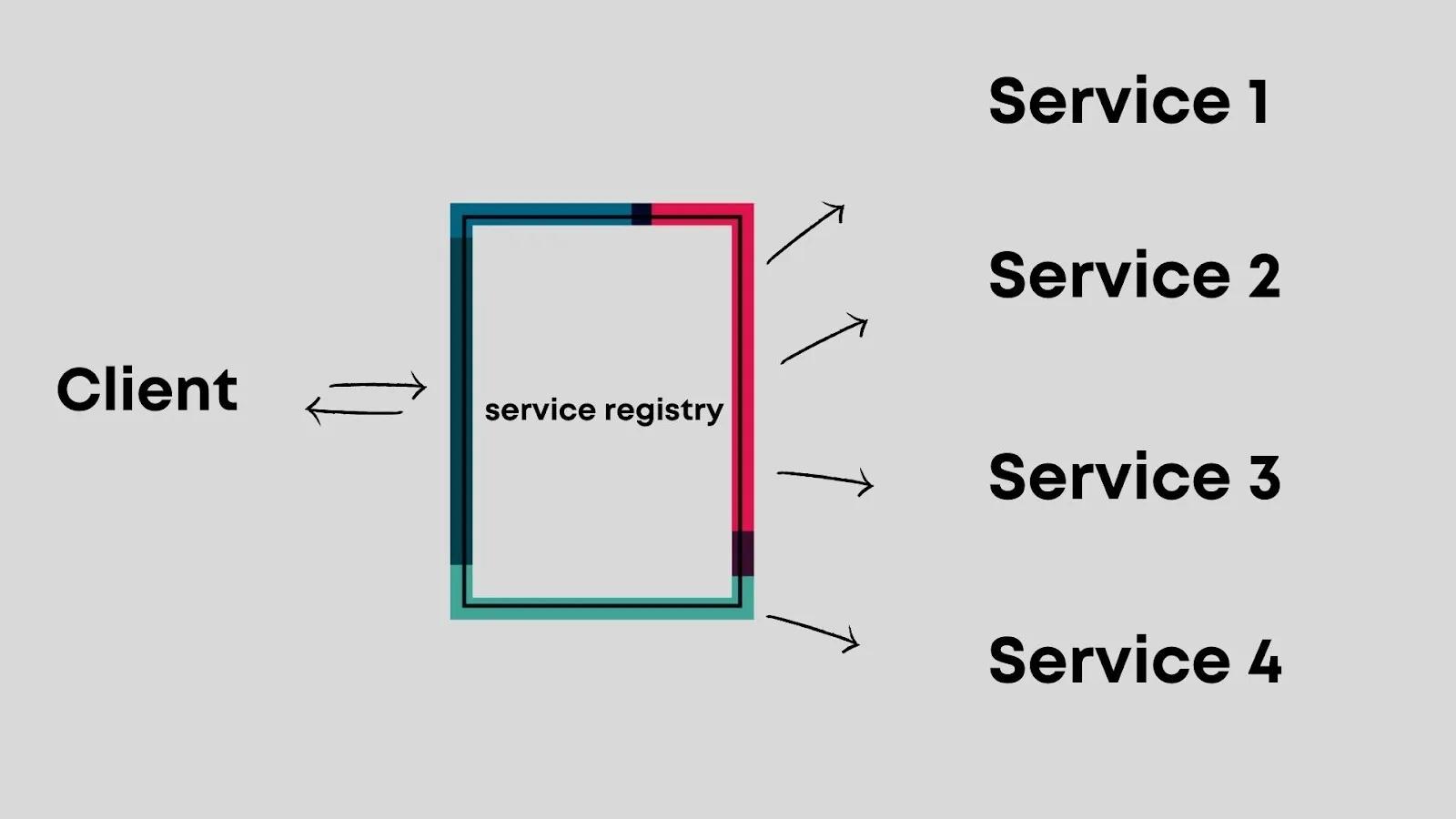

What is a service registry?

A pivotal component in the microservice architecture is the service registry. Consider it the dynamic address book for your microservices, cataloging the details required for inter-service communication.

The service registry acts as a centralized database, storing information about each service instance within the network. This includes data such as the service's hostname, IP address, port, and any other metadata that uniquely identifies and provides context for the service. Keeping this information in one place facilitates the discovery process, allowing services to find and communicate with each other without hardcoded addresses.

Upon startup, every microservice instance registers itself with the service registry, recording its current location and status. This approach ensures that the registry maintains a real-time view of the system's landscape, accommodating the inherently dynamic nature of microservice deployments, where services frequently scale, update, and sometimes fail.
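To make the registration flow concrete, here is a minimal in-memory registry sketch in Python. The class and method names (`ServiceRegistry`, `register`, `heartbeat`, `lookup`) are hypothetical, and the heartbeat-with-TTL approach is one common way to keep the view current, assumed here for illustration. Production registries are typically run as replicated clusters and expose their own APIs.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Instance:
    instance_id: str
    host: str
    port: int
    metadata: Dict[str, str] = field(default_factory=dict)
    last_heartbeat: float = 0.0


class ServiceRegistry:
    """In-memory registry keyed by logical service name (illustrative only)."""

    def __init__(self, ttl_seconds: float = 30.0) -> None:
        self.ttl_seconds = ttl_seconds
        self._services: Dict[str, Dict[str, Instance]] = {}

    def register(self, name: str, instance: Instance, now: Optional[float] = None) -> None:
        # Called by each instance on startup: record its location and a fresh heartbeat.
        instance.last_heartbeat = time.monotonic() if now is None else now
        self._services.setdefault(name, {})[instance.instance_id] = instance

    def heartbeat(self, name: str, instance_id: str, now: Optional[float] = None) -> None:
        # Periodic "still alive" signal that keeps the entry fresh.
        stamp = time.monotonic() if now is None else now
        self._services[name][instance_id].last_heartbeat = stamp

    def deregister(self, name: str, instance_id: str) -> None:
        # Called on graceful shutdown.
        self._services.get(name, {}).pop(instance_id, None)

    def lookup(self, name: str, now: Optional[float] = None) -> List[Instance]:
        # Only instances whose last heartbeat is within the TTL count as available.
        now = time.monotonic() if now is None else now
        return [
            inst
            for inst in self._services.get(name, {}).values()
            if now - inst.last_heartbeat <= self.ttl_seconds
        ]


registry = ServiceRegistry(ttl_seconds=30)
registry.register("cart", Instance("cart-1", "10.0.1.12", 8080), now=0)
registry.register("cart", Instance("cart-2", "10.0.1.13", 8080), now=0)
registry.heartbeat("cart", "cart-1", now=25)
print([i.instance_id for i in registry.lookup("cart", now=40)])  # ['cart-1']
```

Passing `now` explicitly keeps the example deterministic; `cart-2` stopped sending heartbeats, so it silently drops out of lookups instead of receiving traffic.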

The service registry's availability and reliability are paramount. A failure or inaccessibility of the service registry can lead to systemic communication breakdowns, rendering the discovery and interaction between services challenging, if not impossible. Thus, implementing robust mechanisms to ensure the service registry's high availability and consistency is critical in system design.

By abstracting the network details and service locations into a service registry, we achieve a higher level of decoupling between services. This abstraction allows services to communicate based on logical names rather than physical addresses, significantly simplifying the architecture and enhancing its flexibility to adapt to changes.

What is service discovery?

A microservice architecture is made up of smaller applications that need to communicate with each other constantly, often through REST APIs.

Service discovery (or service location discovery) is how applications and microservices automatically locate and communicate with each other. It is a fundamental pattern in microservice architecture that keeps track of where every microservice can be found: each microservice registers its details with a discovery server (the service registry), which makes it easy for other services to look it up.

Types of service discovery

There are two main service discovery patterns you should know about: server-side discovery and client-side discovery.

Let’s break them down in detail. 👇🏾

- Server-side discovery: In this pattern, client applications send requests through a load balancer. The load balancer queries the service registry and then routes the client’s request to an available instance. Think of server-side discovery like the receptionist (load balancer) who answers when you phone an organization. The receptionist asks who you wish to speak with and redirects your call to that person.

- Client-side discovery: In this pattern, the client is responsible for finding available service instances by querying the service registry. It then applies a load-balancing algorithm to choose one of those instances and sends the request to it. This is similar to how we use search engines: you search for a topic, the search engine (service registry) returns a list of results, and you (the client) review them and pick the one that best meets your needs.
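The difference between the two patterns is mostly about who makes the choice. The Python sketch below assumes a hypothetical in-memory `REGISTRY`: the `LoadBalancer` picks an instance on the client's behalf (round robin), while the `DiscoveryClient` queries the registry itself and applies its own algorithm (random choice here). Both algorithms are illustrative choices, not requirements of either pattern.

```python
import random
from typing import Dict, List

# Hypothetical registry contents: service name -> "host:port" instances.
REGISTRY: Dict[str, List[str]] = {
    "profile": ["10.0.3.1:7000", "10.0.3.2:7000", "10.0.3.3:7000"],
}


def query_registry(name: str) -> List[str]:
    return list(REGISTRY.get(name, []))


class LoadBalancer:
    """Server-side discovery: clients only know the load balancer's address."""

    def __init__(self) -> None:
        self._next: Dict[str, int] = {}

    def route(self, service: str) -> str:
        instances = query_registry(service)  # the load balancer asks the registry
        if not instances:
            raise LookupError(service)
        i = self._next.get(service, 0)
        self._next[service] = i + 1
        return instances[i % len(instances)]  # round robin, chosen for the client


class DiscoveryClient:
    """Client-side discovery: the client queries the registry and picks itself."""

    def __init__(self, rng: random.Random) -> None:
        self._rng = rng

    def choose(self, service: str) -> str:
        instances = query_registry(service)
        if not instances:
            raise LookupError(service)
        return self._rng.choice(instances)  # the client owns the algorithm


lb = LoadBalancer()
print([lb.route("profile") for _ in range(4)])
client = DiscoveryClient(random.Random(42))
print(client.choose("profile"))
```

With server-side discovery, changing the balancing algorithm touches only the load balancer; with client-side discovery, every client library must be updated, but there is one less network hop.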

Now that we understand what service discovery is, let’s look at what an API gateway and a service mesh are, and which is preferred for your microservice architecture.

What is an API gateway?

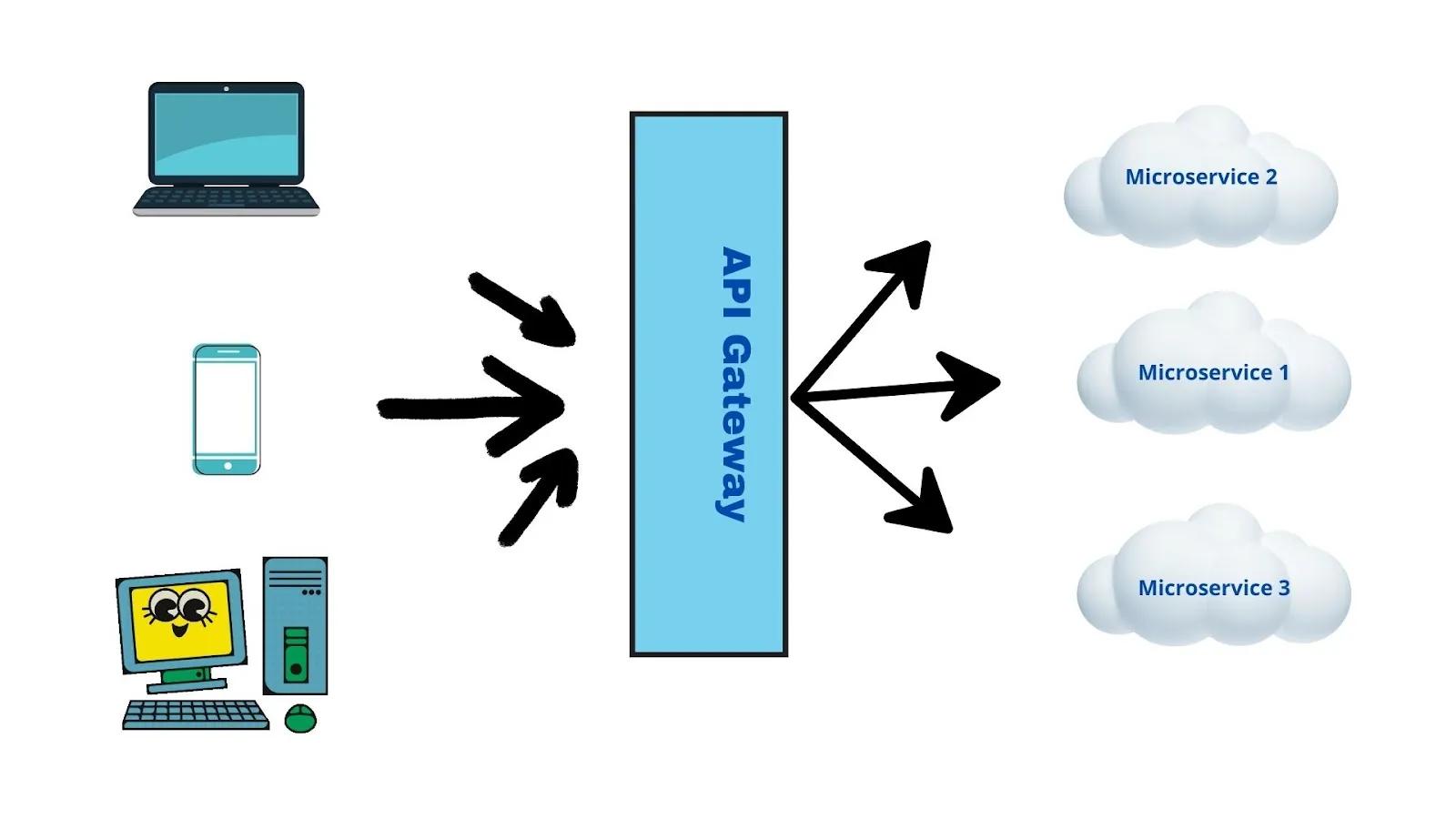

An API gateway is a management service that accepts client API requests, directs the requests to the correct backend services, aggregates retrieved results, and returns a synchronous response to the client.

To better understand what an API gateway does, consider an e-commerce site where users make requests to different microservices, such as a shopping cart, checkout, or user profile. Many of these requests trigger API calls to more than one microservice. Rather than having the client make each of those calls itself, an API gateway acts as a mid-layer between the clients and the services, so a single client request can retrieve, for example, all the product details a page needs.
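Here is a minimal Python sketch of that aggregation step, assuming three hypothetical backend services (`product`, `inventory`, `reviews`) represented as local functions with made-up data. A real gateway would issue network calls, but the shape is the same: fan out concurrently, wait for the results, and return one combined response.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict


# Hypothetical backend calls; in practice these would be HTTP or gRPC requests.
def fetch_product(product_id: str) -> Dict[str, Any]:
    return {"id": product_id, "name": "Trail shoe", "price": 89.0}


def fetch_inventory(product_id: str) -> Dict[str, Any]:
    return {"in_stock": 12}


def fetch_reviews(product_id: str) -> Dict[str, Any]:
    return {"rating": 4.6, "count": 311}


BACKENDS: Dict[str, Callable[[str], Dict[str, Any]]] = {
    "product": fetch_product,
    "inventory": fetch_inventory,
    "reviews": fetch_reviews,
}


def product_page(product_id: str) -> Dict[str, Any]:
    """One client request in, several backend calls out, one combined response back."""
    with ThreadPoolExecutor(max_workers=len(BACKENDS)) as pool:
        # Submit every backend call at once so they run concurrently.
        futures = {key: pool.submit(call, product_id) for key, call in BACKENDS.items()}
        # Wait for each result and merge them under their backend's key.
        return {key: future.result() for key, future in futures.items()}


print(product_page("sku-1"))
```

The client makes one round trip instead of three, and the gateway is the only component that needs to know which services make up a "product page".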

Without an API gateway, developers could build these features into each application themselves. However, that is tedious, and implementing authentication and access control separately in every service increases the risk of exposing an API to unauthorized access.

A core role of API gateways is security: they act as a gatekeeper for all incoming requests, ensuring they meet predefined security criteria such as authentication, authorization, and threat protection. By validating API keys, JSON Web Tokens (JWTs), or OAuth credentials, the API gateway prevents unauthorized access to backend services. Many gateways can also help mitigate attacks such as SQL injection and cross-site scripting (XSS), ensuring that only legitimate requests reach your microservices.
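As an illustration of token validation at the edge, the sketch below verifies an HS256-signed JWT using only Python's standard library. It is a simplified example under stated assumptions: the shared secret, the claim names, and the `sign_hs256` helper (which exists only to produce a test token) are hypothetical. Real deployments should rely on a vetted JWT library or the gateway's built-in authentication support rather than hand-rolled verification.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    # JWTs strip base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_hs256(claims: Dict[str, Any], secret: bytes) -> str:
    """Issue a test token (normally the identity provider does this, not the gateway)."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> Optional[Dict[str, Any]]:
    """Return the claims if the token is valid; None means the gateway should reply 401."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(b64url_decode(header_b64))
        claims = json.loads(b64url_decode(payload_b64))
        signature = b64url_decode(sig_b64)
    except ValueError:  # wrong segment count, bad base64, or bad JSON
        return None
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        return None  # reject unexpected algorithms, including "none"
    if not isinstance(claims, dict):
        return None
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):  # constant-time comparison
        return None
    now = time.time() if now is None else now
    exp = claims.get("exp")
    if exp is not None and (not isinstance(exp, (int, float)) or now >= exp):
        return None  # expired or malformed expiry
    return claims


secret = b"demo-secret"  # hypothetical shared secret
token = sign_hs256({"sub": "user-123", "exp": 2000}, secret)
print(verify_hs256(token, secret, now=1000))  # {'sub': 'user-123', 'exp': 2000}
```

Doing this check once at the gateway means backend services can trust that any request reaching them has already been authenticated.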

An integral part of securing APIs involves managing how often they can be called to prevent abuse and ensure service availability for all users. This is where rate limiting comes into play. Rate limiting is a crucial feature for managing the flow of requests to your microservices, ensuring that your infrastructure can handle incoming traffic without being overwhelmed.

An API gateway is well positioned to act as the first line of defense against excessive use or potential abuse of your APIs. By setting thresholds on the number of requests a client can make within a specific time frame, the API gateway can help prevent service outages and maintain the quality of service for all users. This protects your backend services from crashing due to overload and helps control costs by preventing unnecessary resource consumption. Implementing rate limiting at the API gateway level provides a centralized control mechanism, which makes it easier to manage traffic across all your services.
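A common algorithm for this kind of threshold is the token bucket, sketched below in Python. It is one option among several (fixed windows and sliding windows are others), and the class names and parameters here are hypothetical. Each client gets a bucket that holds up to `capacity` tokens and refills at `refill_per_second`; a request is allowed only if a token is available. Time is passed in explicitly so the behavior is easy to test.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TokenBucket:
    capacity: float           # maximum burst size
    refill_per_second: float  # sustained request rate
    tokens: float
    updated_at: float

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class RateLimiter:
    """Per-client limits enforced centrally at the gateway (illustrative only)."""

    def __init__(self, capacity: float, refill_per_second: float) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str, now: float) -> bool:
        bucket = self._buckets.get(client_id)
        if bucket is None:
            # New clients start with a full bucket.
            bucket = TokenBucket(self.capacity, self.refill_per_second, self.capacity, now)
            self._buckets[client_id] = bucket
        return bucket.allow(now)


limiter = RateLimiter(capacity=3, refill_per_second=1.0)
print([limiter.allow("client-a", now=0.0) for _ in range(4)])  # [True, True, True, False]
print(limiter.allow("client-a", now=1.0))  # True
```

With `capacity=3` and `refill_per_second=1.0`, a client can burst three requests, after which roughly one request per second is allowed; a rejected request would typically receive an HTTP 429 response from the gateway.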

In essence, an API gateway simplifies communication management tasks such as request routing, composition, and load balancing across multiple instances of a microservice. It can also perform log tracing and aggregation without disrupting how API requests are handled. In short, it is the home of API management within your application.
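Routing and load balancing can be illustrated together. The Python sketch below assumes a hypothetical route table that maps path prefixes (including versioned paths such as `/api/v1/cart`) to upstream instances; the most specific matching prefix wins, and requests are spread across that route's instances in round-robin order. Real gateways support far richer matching, such as hosts, headers, and methods.

```python
from itertools import count
from typing import Dict, List, Tuple


class Router:
    """Longest-prefix routing plus round-robin balancing (illustrative only)."""

    def __init__(self, routes: Dict[str, List[str]]) -> None:
        self.routes = routes  # path prefix -> upstream instances
        self._counters = {prefix: count() for prefix in routes}

    def match(self, path: str) -> str:
        # A prefix matches whole path segments only: /api/v1/cart must not match /api/v1/cartography.
        candidates = [
            p for p in self.routes
            if path == p or path.startswith(p.rstrip("/") + "/")
        ]
        if not candidates:
            raise LookupError(f"no route for {path}")
        return max(candidates, key=len)  # the most specific prefix wins

    def pick(self, path: str) -> Tuple[str, str]:
        prefix = self.match(path)
        instances = self.routes[prefix]
        upstream = instances[next(self._counters[prefix]) % len(instances)]
        return prefix, upstream


# Hypothetical route table: two API versions plus a catch-all.
router = Router({
    "/api/v1/cart": ["cart-v1-a:8080", "cart-v1-b:8080"],
    "/api/v2/cart": ["cart-v2:8080"],
    "/": ["web:80"],
})
print(router.pick("/api/v1/cart/items"))  # ('/api/v1/cart', 'cart-v1-a:8080')
print(router.pick("/api/v1/cart/items"))  # ('/api/v1/cart', 'cart-v1-b:8080')
```

Keeping v1 and v2 as separate routes lets a new API version roll out alongside the old one, which is one way a gateway supports the lifecycle management described next.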

API management encompasses a wide range of functionality that extends beyond simple request routing, including API versioning, user authentication, authorization, monitoring, and analytics. An API gateway serves as a critical component in API management by offering these capabilities through a unified interface. This simplifies deploying, securing, and monitoring APIs, improving both the developer experience and end users' interaction with the microservices. With built-in support for managing API lifecycles, the gateway helps introduce API changes without disrupting existing services. Furthermore, adding observability and monitoring to your API gateway provides valuable insights into API usage patterns, helping to optimize the API ecosystem and improve service delivery.

What is a service mesh?

A service mesh is an infrastructure layer that handles how the internal services within an application communicate. It adds service discovery, load balancing, encryption, authentication, observability, and other security and reliability features to cloud-native applications.

Fundamentally, service meshes allow developers to create robust enterprise applications by handling management and communication between multiple microservices.

It is usually implemented by deploying a proxy instance, called a sidecar, alongside each service instance. These proxies handle inter-service communication and act as the point where the service mesh features are introduced.
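The sidecar idea can be sketched as a small wrapper around outbound calls. In this Python sketch, `transport` is a hypothetical stand-in for the actual network call, and the `x-request-id` header name and the retry-on-`ConnectionError` policy are illustrative assumptions. Real sidecars are separate processes that intercept traffic transparently, and retries should only be applied to idempotent requests.

```python
import time
import uuid
from typing import Any, Callable, Dict, List, Optional

# Hypothetical transport: performs the actual network call to a destination.
Transport = Callable[[str, Dict[str, Any]], Dict[str, Any]]


class SidecarProxy:
    """Outbound proxy that sits next to one service instance (illustrative only)."""

    def __init__(self, transport: Transport, max_attempts: int = 3) -> None:
        self.transport = transport
        self.max_attempts = max_attempts
        self.latencies: Dict[str, List[float]] = {}  # per-destination timings

    def send(self, destination: str, request: Dict[str, Any]) -> Dict[str, Any]:
        # Attach a request ID once so every retry carries the same trace identifier.
        headers = dict(request.get("headers", {}))
        headers.setdefault("x-request-id", str(uuid.uuid4()))
        request = {**request, "headers": headers}

        last_error: Optional[Exception] = None
        for _ in range(self.max_attempts):
            start = time.perf_counter()
            try:
                return self.transport(destination, request)
            except ConnectionError as exc:
                last_error = exc  # treat connection failures as retryable
            finally:
                # Runs on success and on failure, so every attempt is measured.
                self.latencies.setdefault(destination, []).append(time.perf_counter() - start)
        raise ConnectionError(
            f"{destination} unavailable after {self.max_attempts} attempts"
        ) from last_error


def fake_transport(destination: str, request: Dict[str, Any]) -> Dict[str, Any]:
    return {"status": 200, "from": destination}


proxy = SidecarProxy(fake_transport)
print(proxy.send("accounts", {"path": "/users/7"})["status"])  # 200
```

The application code only asks for `accounts`; tracing, retries, and metrics live in the proxy, which is why mesh features can be added without changing each service.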

Returning to the e-commerce illustration used earlier, imagine the user proceeds to check out their order from the shopping cart. The microservice that retrieves the shopping cart data for checkout must communicate with the microservice that holds user account data to confirm the user’s identity. This is where the service mesh comes into play! It handles the communication between these two microservices, for example, by locating an available instance of the account service and securing the connection, so the user’s details can be confirmed reliably.

As with API gateway features, service mesh features can also be hardcoded into an application. However, this is tedious for developers, as they may need to modify application code or configuration whenever network addresses change.

A core feature of a service mesh is its ability to act as a circuit breaker. Circuit breakers are an essential pattern in microservice architectures for enhancing system resilience. They prevent a network or service failure from cascading through the system by temporarily blocking calls to potentially faulty services until they recover. A service mesh supports circuit breaking by monitoring services' health and traffic patterns. When a service fails to respond or exceeds predefined failure-rate thresholds, the service mesh can automatically "trip" the circuit breaker, redirecting or halting traffic to the failing service.

This mechanism ensures that the system remains responsive and prevents failures from one service from affecting others. By integrating circuit breakers, a service mesh contributes significantly to the stability and reliability of the overall microservices ecosystem, ensuring that services degrade gracefully in the face of errors.
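The closed, open, and half-open behavior described above can be sketched as a small state machine. This Python sketch is a simplified single-threaded illustration with hypothetical names and thresholds: it trips after a number of consecutive failures, a simpler criterion than the failure-rate thresholds a mesh may use. Service meshes typically configure this behavior declaratively in the proxy rather than in application code.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is rejected immediately."""


class CircuitBreaker:
    """Closed -> open after N consecutive failures; half-open after a cool-down."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 10.0) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.failures = 0
        self.state = "closed"
        self.opened_at = 0.0

    def call(self, fn: Callable[[], T], now: float) -> T:
        if self.state == "open":
            if now - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("failing fast; upstream marked unhealthy")
            self.state = "half-open"  # let the next request through as a trial
        try:
            result = fn()
        except Exception:
            self._record_failure(now)
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.state = "closed"
        return result

    def _record_failure(self, now: float) -> None:
        self.failures += 1
        # A failed trial in half-open state, or too many failures, opens the circuit.
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = now
```

While the circuit is open, callers get an immediate error instead of waiting on a struggling service, which is what keeps one failure from tying up resources across the rest of the system.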

Examples of service meshes in the cloud-native ecosystem include Linkerd, Kuma, Consul, and Istio.

Similarities and differences between a service mesh and an API gateway

Whether to implement an API gateway or a service mesh for enterprise-level application development is a recurring question among developers.

This section of the article will help you understand the differences and similarities between them and help you decide which to go with.

Similarities between an API gateway & a service mesh:

- Resilience: With either or both technologies in place, your cloud-native application can recover more quickly from failures, for example by retrying failed requests or routing around unhealthy instances.

- Traffic management: Both technologies manage and route traffic. Without either of them, the traffic from API calls made by clients would be difficult to manage, which can increase request processing and response times.

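- Client-side discovery: In both technologies, the component that makes the outbound call (the API gateway itself, or the sidecar proxy next to the calling service) queries for available instances and selects one, so application code does not have to.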

- Service discovery: Both technologies facilitate how applications and microservices can automatically locate and communicate with each other.

- System observability: Both technologies can control which services clients can access and record logs of which clients have accessed specific services. This helps to track the health of each API call made to a microservice.

Differences between an API gateway & a Service mesh:

- Capabilities: API gateways serve as an edge service and perform tasks that support your microservices’ business functionality, like request transformation, complex routing, or payload handling, while the service mesh focuses on a narrower set of inter-service communication problems.

- External vs. internal communication: A significant distinction between these technologies is where they operate. The API gateway operates at the application level, while the service mesh operates at the infrastructure level. An API gateway stands between the user and the internal application logic, while the service mesh sits between the internal microservices. In other words, API gateways focus on exposing application functionality to clients, while a service mesh deals with service-to-service communication.

- Monitoring and observability: API gateways can help you track the overall health of an application by measuring metrics that identify failing APIs. Meanwhile, service mesh metrics help teams identify issues with the individual microservices and components that make up an application’s back end rather than the entire program. A service mesh helps determine the cause of specific application performance issues.

- Tooling and support: API gateways work with almost every application architecture, including both monolithic and microservice applications. Service meshes are typically designed for specific environments, such as Kubernetes. Also, API gateways usually come with security policies and features that are easy to get started with, while service meshes often involve complex configuration and a steep learning curve.

- Maturity: API gateways are a more established technology, and their popularity has produced many vendors. In comparison, the service mesh is a newer open-source technology with fewer vendors.

Can an API gateway & service mesh co-exist?

Both technologies have many things in common, but their significant difference lies in where they operate. The API gateway is a centralized point of control that works at the application level, managing client-to-service traffic at the edge. The service mesh operates at the infrastructure level, managing internal service-to-service communication between the microservices that make up an application.

When combined with a service mesh, the API gateway acts as a mediator at the edge: it handles requests coming into the system, while the mesh handles traffic between the services behind it. This combination can improve security and delivery speed and help ensure application uptime and resiliency, while keeping your applications easily consumable.
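The division of labor can be sketched with two hypothetical policy checks in Python: the gateway admits or rejects external requests (here by API key), and each internal hop is checked by the mesh against a caller-to-callee allow list. In a real mesh, the caller's identity would come from mutual TLS certificates rather than a string argument; the service names, key, and policy table are all assumptions for illustration.

```python
from typing import Dict, Set

# Hypothetical edge policy: API keys the gateway accepts from external clients.
VALID_API_KEYS: Set[str] = {"key-abc"}

# Hypothetical mesh policy: which internal identities may call each service.
MESH_ALLOW: Dict[str, Set[str]] = {
    "checkout": {"gateway"},
    "accounts": {"checkout"},
}


def gateway_admit(api_key: str) -> bool:
    """Edge (client-to-service): the gateway decides whether a request gets in."""
    return api_key in VALID_API_KEYS


def mesh_admit(caller: str, callee: str) -> bool:
    """Internal (service-to-service): the callee's sidecar checks the caller's identity."""
    return caller in MESH_ALLOW.get(callee, set())


def checkout_flow(api_key: str) -> str:
    # 1. External request enters through the gateway.
    if not gateway_admit(api_key):
        return "401 from gateway"
    # 2. Gateway forwards to checkout; the mesh checks this hop.
    if not mesh_admit("gateway", "checkout"):
        return "403 from mesh (gateway -> checkout)"
    # 3. Checkout calls accounts to confirm the user; the mesh checks this hop too.
    if not mesh_admit("checkout", "accounts"):
        return "403 from mesh (checkout -> accounts)"
    return "200 order placed"


print(checkout_flow("key-abc"))  # 200 order placed
print(checkout_flow("bad-key"))  # 401 from gateway
```

Neither layer replaces the other: the gateway never sees the checkout-to-accounts call, and the mesh never has to know about external API keys.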

Conclusion

API gateways and service meshes overlap in several ways, and when these technologies are combined, you get consistent end-to-end management of communication, from traffic at the edge to service-to-service calls.

To maximize the agility of your application and minimize the effort developers spend on managing communications, you may need both a service mesh and an API gateway for your application.

Simplified Kubernetes management with Edge Stack API Gateway

Routing traffic into your Kubernetes cluster requires modern traffic management. That’s why we built Ambassador Edge Stack around a modern Kubernetes ingress controller that supports a broad range of protocols, including HTTP/3, gRPC, and gRPC-Web, as well as TLS termination.